This is not That: Misclassifications

In this section we consider some interesting effects of erroneous classifications.

We’re not talking about identity verification for individuals, just whether we’re assigning the correct “group.”

Friend or Foe

(start with some gripping friend-or-foe narrative…but not Jeb Stuart)

IFF (Identification Friend or Foe): radar-based, only positively identifies friends

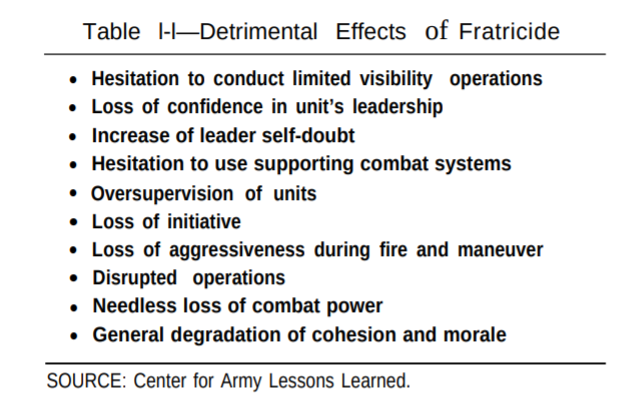

friendly-fire casualties = “fratricide”

“24% of all U.S. combat fatalities in the [Persian Gulf] war were caused by friendly fire…much higher than the nominal two percent rate frequently cited in the military literature.” [1] Reasons for this include that “total U.S. losses were very low, thus the percentage of fratricides appeared abnormally high” and “near-absolute dominance of the battlefield by the U.S. meant that only U.S. rounds were flying through the air.”

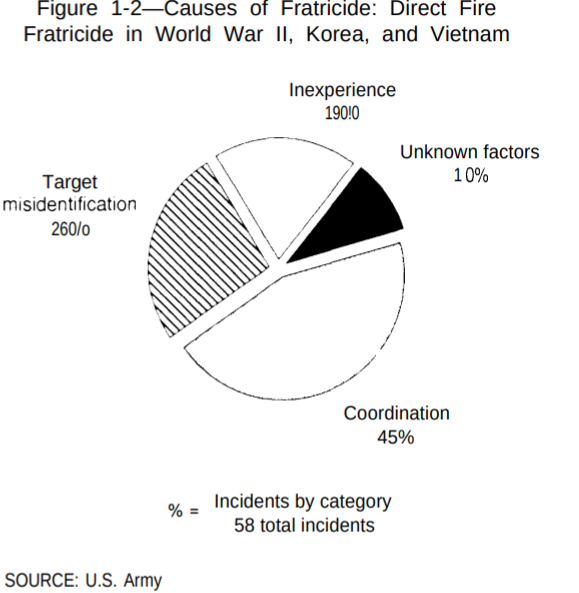

26% of fratricides among U.S. forces from WWII to Vietnam resulted from target misidentification; the other 74% were due to a combination of factors such as “coordination” (being in the wrong place at the wrong time) or lack of fire discipline.

Patriot missiles… https://www.latimes.com/archives/la-xpm-2003-apr-21-war-patriot21-story.html

https://en.wikipedia.org/wiki/List_of_friendly_fire_incidents

“The problem is our weapons can kill at a greater range than we can identify a target as friend or foe,” Army Maj. Bill McKean, the operation manager of the Joint Combat Identification Advanced Concept Technology Demonstration, told the American Forces Press Service. [3]

Medical misdiagnosis

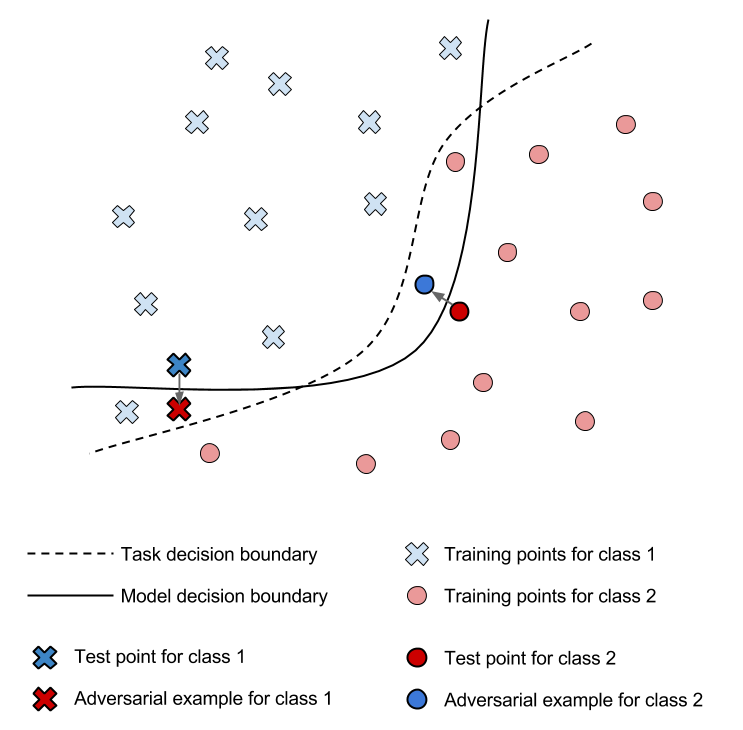

Hacking Classifiers: Adversarial Examples

show the panda example…

lots of little changes add up
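One way to see how “lots of little changes add up” is with a toy linear classifier, in the spirit of the linear explanation in [GSS14]. The sketch below is only an illustration under assumed values: the input size, the random weights, and the per-feature step `epsilon` are all hypothetical, not taken from any real model. Each feature is nudged by at most `epsilon`, yet the classifier's score shifts by `epsilon` times the sum of the absolute weights, which grows with the number of features.

```python
import numpy as np

rng = np.random.default_rng(0)

n_features = 1000   # hypothetical input dimension (e.g. number of pixels)
epsilon = 0.01      # hypothetical per-feature change, meant to be too small to notice

w = rng.normal(size=n_features)   # weights of a toy linear classifier (assumed, random)
x = rng.normal(size=n_features)   # a toy input (assumed, random)

# Nudge every feature by epsilon in the direction that raises the score.
x_adv = x + epsilon * np.sign(w)

# How far the classifier's score w.x moved because of the nudge.
score_shift = w @ x_adv - w @ x

# No single feature moved by more than epsilon...
max_change = np.max(np.abs(x_adv - x))
# ...but the score moved by epsilon times the sum of |w_i|.
expected_shift = epsilon * np.sum(np.abs(w))

print(f"largest single-feature change: {max_change:.4f}")
print(f"shift in classifier score:     {score_shift:.4f}")
```

Increasing `n_features` while holding `epsilon` fixed makes the score shift larger, even though no individual feature changes any more than before.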

cleverhans blog explanation pic: (where “task decision boundary” = “how a human would do it” or “what the answer should be”)

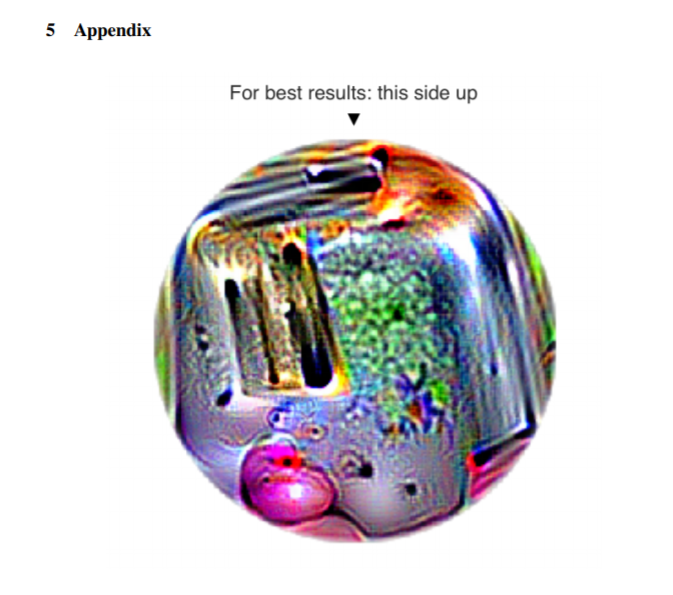

Anh Nguyen + Jeff Clune’s example (2014): https://youtu.be/M2IebCN9Ht4

security concerns

turtle <–> gun example

Demo: http://jlin.xyz/advis/

Adversarial Patch : https://arxiv.org/abs/1712.09665

adversarial patch live: https://youtu.be/MIbFvK2S9g8

GANs…

Adversarial robustness survey: “Opportunities and Challenges in Deep Learning Adversarial Robustness: A Survey” (July 3, 2020): “We provide a taxonomy to ==classify== adversarial attacks and defenses and formulate for the Robust Optimization problem in a min-max setting, and divide it into 3 subcategories, namely: Adversarial (re)Training, Regularization Approach, and Certified Defenses.”
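For orientation, the “min-max setting” mentioned in the quote is commonly written along the following lines. The notation here is an assumption for illustration, not taken from the survey: model parameters $\theta$, classifier $f_\theta$, loss $\mathcal{L}$, data distribution $\mathcal{D}$, perturbation $\delta$, and perturbation budget $\epsilon$ under some norm $p$.

```latex
\min_{\theta} \; \mathbb{E}_{(x, y) \sim \mathcal{D}}
  \left[ \max_{\|\delta\|_{p} \le \epsilon}
    \mathcal{L}\bigl(f_{\theta}(x + \delta),\, y\bigr) \right]
```

The inner maximization plays the attacker, searching for the worst small change to each input; the outer minimization plays the defender, choosing parameters that keep the loss low even under that worst case.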

Do I want to tackle “passing as”?

opposite of adversarial examples? Or is it the same thing? camouflage, disguise

…Where to put Google’s ‘gorilla’ snafu?

https://twitter.com/jackyalcine/status/615329515909156865

https://www.theverge.com/2018/1/12/16882408/google-racist-gorillas-photo-recognition-algorithm-ai

Gig Workers (labor classification)

Uber in California, others in Minnesota [2]

Uber is not set up as a taxi company with employee drivers; it is more like a rider-finding service for independent drivers. (On this view, the drivers are Uber’s customers, and the riders are the drivers’ customers.)

In Psychology Too / Healing

training ourselves that what’s happening now is not the same as what happened in our childhoods

References

[1] U.S. Congress, Office of Technology Assessment, Who Goes There: Friend or Foe?, OTA-ISC-537 (Washington, DC: U.S. Government Printing Office, June 1993). https://www.airforcemag.com/PDF/DocumentFile/Documents/2005/Fratricide_OTA_060193.pdf

[2] Office of the Legislative Auditor, State of Minnesota, Misclassification of Employees as Independent Contractors, November 2007.

[3] https://abcnews.go.com/US/story?id=2985261&page=1

[GSS14] Goodfellow, I. J., Shlens, J., & Szegedy, C. (2014). Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572.

[SZS13] Szegedy, C., Zaremba, W., Sutskever, I., Bruna, J., Erhan, D., Goodfellow, I., & Fergus, R. (2013). Intriguing properties of neural networks. arXiv preprint arXiv:1312.6199.